I used Doubao to generate a PyTorch example for learning purposes.

Code:

import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import datasets, transforms
from torch.utils.data import DataLoader

# Hyperparameters
batch_size = 64
learning_rate = 0.01
epochs = 5

# Preprocessing: convert images to tensors, then normalize with the MNIST mean and std
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.1307,), (0.3081,))
])

# Load the training and test sets
train_dataset = datasets.MNIST(root='./data', train=True,
                               download=True, transform=transform)
test_dataset = datasets.MNIST(root='./data', train=False,
                              download=True, transform=transform)

train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)

# Define a simple fully connected network
class SimpleNet(nn.Module):
    def __init__(self):
        super(SimpleNet, self).__init__()
        self.fc1 = nn.Linear(28 * 28, 128)
        self.fc2 = nn.Linear(128, 64)
        self.fc3 = nn.Linear(64, 10)

    def forward(self, x):
        x = x.view(-1, 28 * 28)  # flatten each 28x28 image into a 784-vector
        x = torch.relu(self.fc1(x))
        x = torch.relu(self.fc2(x))
        x = self.fc3(x)  # raw logits; CrossEntropyLoss applies log-softmax itself
        return x

model = SimpleNet()

# Loss function and optimizer
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=learning_rate)

# Train the model
for epoch in range(epochs):
    model.train()
    for batch_idx, (data, target) in enumerate(train_loader):
        optimizer.zero_grad()
        output = model(data)
        loss = criterion(output, target)
        loss.backward()
        optimizer.step()
        if batch_idx % 100 == 0:
            print(f'Train Epoch: {epoch} [{batch_idx * len(data)}/{len(train_loader.dataset)} '
                  f'({100. * batch_idx / len(train_loader):.0f}%)]\tLoss: {loss.item():.6f}')

# Test the model
model.eval()
test_loss = 0
correct = 0
with torch.no_grad():
    for data, target in test_loader:
        output = model(data)
        test_loss += criterion(output, target).item()
        pred = output.argmax(dim=1, keepdim=True)
        correct += pred.eq(target.view_as(pred)).sum().item()

# Note: criterion returns a per-batch mean, so dividing the summed batch means by the
# number of samples understates the average loss by roughly a factor of batch_size.
test_loss /= len(test_loader.dataset)
print(f'\nTest set: Average loss: {test_loss:.4f}, Accuracy: {correct}/{len(test_loader.dataset)} '
      f'({100. * correct / len(test_loader.dataset):.0f}%)\n')

Output:

Train Epoch: 0 [0/60000 (0%)]	Loss: 2.296187
Train Epoch: 0 [6400/60000 (11%)]	Loss: 1.770142
Train Epoch: 0 [12800/60000 (21%)]	Loss: 0.882951
Train Epoch: 0 [19200/60000 (32%)]	Loss: 0.774030
Train Epoch: 0 [25600/60000 (43%)]	Loss: 0.608989
Train Epoch: 0 [32000/60000 (53%)]	Loss: 0.443349
Train Epoch: 0 [38400/60000 (64%)]	Loss: 0.409414
Train Epoch: 0 [44800/60000 (75%)]	Loss: 0.369192
Train Epoch: 0 [51200/60000 (85%)]	Loss: 0.476558
Train Epoch: 0 [57600/60000 (96%)]	Loss: 0.388408
Train Epoch: 1 [0/60000 (0%)]	Loss: 0.347056
Train Epoch: 1 [6400/60000 (11%)]	Loss: 0.205838
Train Epoch: 1 [12800/60000 (21%)]	Loss: 0.298296
Train Epoch: 1 [19200/60000 (32%)]	Loss: 0.265492
Train Epoch: 1 [25600/60000 (43%)]	Loss: 0.578405
Train Epoch: 1 [32000/60000 (53%)]	Loss: 0.261888
Train Epoch: 1 [38400/60000 (64%)]	Loss: 0.473449
Train Epoch: 1 [44800/60000 (75%)]	Loss: 0.257322
Train Epoch: 1 [51200/60000 (85%)]	Loss: 0.168993
Train Epoch: 1 [57600/60000 (96%)]	Loss: 0.206920
Train Epoch: 2 [0/60000 (0%)]	Loss: 0.192081
Train Epoch: 2 [6400/60000 (11%)]	Loss: 0.396907
Train Epoch: 2 [12800/60000 (21%)]	Loss: 0.361700
Train Epoch: 2 [19200/60000 (32%)]	Loss: 0.290306
Train Epoch: 2 [25600/60000 (43%)]	Loss: 0.214245
Train Epoch: 2 [32000/60000 (53%)]	Loss: 0.360949
Train Epoch: 2 [38400/60000 (64%)]	Loss: 0.281561
Train Epoch: 2 [44800/60000 (75%)]	Loss: 0.380115
Train Epoch: 2 [51200/60000 (85%)]	Loss: 0.323679
Train Epoch: 2 [57600/60000 (96%)]	Loss: 0.289964
Train Epoch: 3 [0/60000 (0%)]	Loss: 0.134732
Train Epoch: 3 [6400/60000 (11%)]	Loss: 0.380322
Train Epoch: 3 [12800/60000 (21%)]	Loss: 0.189159
Train Epoch: 3 [19200/60000 (32%)]	Loss: 0.192855
Train Epoch: 3 [25600/60000 (43%)]	Loss: 0.284537
Train Epoch: 3 [32000/60000 (53%)]	Loss: 0.358586
Train Epoch: 3 [38400/60000 (64%)]	Loss: 0.133981
Train Epoch: 3 [44800/60000 (75%)]	Loss: 0.117453
Train Epoch: 3 [51200/60000 (85%)]	Loss: 0.245508
Train Epoch: 3 [57600/60000 (96%)]	Loss: 0.117379
Train Epoch: 4 [0/60000 (0%)]	Loss: 0.198738
Train Epoch: 4 [6400/60000 (11%)]	Loss: 0.287366
Train Epoch: 4 [12800/60000 (21%)]	Loss: 0.390377
Train Epoch: 4 [19200/60000 (32%)]	Loss: 0.242391
Train Epoch: 4 [25600/60000 (43%)]	Loss: 0.180091
Train Epoch: 4 [32000/60000 (53%)]	Loss: 0.191751
Train Epoch: 4 [38400/60000 (64%)]	Loss: 0.172341
Train Epoch: 4 [44800/60000 (75%)]	Loss: 0.284955
Train Epoch: 4 [51200/60000 (85%)]	Loss: 0.119116
Train Epoch: 4 [57600/60000 (96%)]	Loss: 0.200912

Test set: Average loss: 0.0028, Accuracy: 9466/10000 (95%)

Code explanation:

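Data preprocessing: transforms.Compose chains two steps. ToTensor converts each image to a tensor, and Normalize standardizes it with mean 0.1307 and standard deviation 0.3081.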
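To make the normalization step concrete, here is a minimal sketch in plain Python of the per-pixel arithmetic that ToTensor followed by Normalize performs. The helper name normalize_pixel is made up for illustration; the mean and standard deviation are the values passed to Normalize in the code above.

```python
MNIST_MEAN = 0.1307  # mean passed to transforms.Normalize in the example
MNIST_STD = 0.3081   # std passed to transforms.Normalize in the example


def normalize_pixel(value: int) -> float:
    """Mimic ToTensor + Normalize for one 8-bit pixel (hypothetical helper)."""
    scaled = value / 255.0                    # ToTensor maps 0..255 to 0.0..1.0
    return (scaled - MNIST_MEAN) / MNIST_STD  # Normalize subtracts mean, divides by std


if __name__ == "__main__":
    for v in (0, 128, 255):
        print(v, round(normalize_pixel(v), 4))
```

With these constants, a black background pixel (0) ends up at about -0.42 and a fully white stroke pixel (255) at about 2.82, so the network sees inputs centered near zero rather than raw 0..255 values.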

Loading the dataset: datasets.MNIST downloads and loads the MNIST dataset, and DataLoader then serves it in batches.

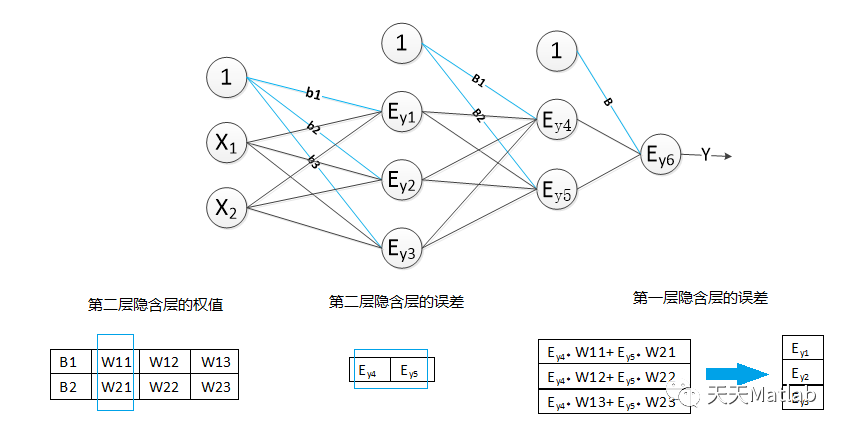

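Defining the network: a simple fully connected network with three linear layers (784 → 128 → 64 → 10), with ReLU applied after the first two.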
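As a quick sanity check of the architecture described above, the following sketch rebuilds the same three layers and pushes a random batch through them. The random tensor is only a stand-in for real MNIST images, and the variable names dummy_batch and num_params are illustrative.

```python
import torch
import torch.nn as nn


class SimpleNet(nn.Module):
    """Same layer sizes as the network in the example above."""

    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(28 * 28, 128)
        self.fc2 = nn.Linear(128, 64)
        self.fc3 = nn.Linear(64, 10)

    def forward(self, x):
        x = x.view(-1, 28 * 28)
        x = torch.relu(self.fc1(x))
        x = torch.relu(self.fc2(x))
        return self.fc3(x)


model = SimpleNet()

# Random stand-in for one batch of 64 normalized MNIST images (not real data).
dummy_batch = torch.randn(64, 1, 28, 28)
logits = model(dummy_batch)
print(logits.shape)  # one row of 10 class scores per image

# Weights plus biases per layer: 784*128+128, 128*64+64, 64*10+10.
num_params = sum(p.numel() for p in model.parameters())
print(num_params)
```

The parameter count works out to 100480 + 8256 + 650 = 109386, which shows how small this model is and why it trains quickly on a CPU.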

Defining the loss function and optimizer: the cross-entropy loss nn.CrossEntropyLoss and the stochastic gradient descent optimizer optim.SGD.
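The sketch below illustrates what these two pieces compute, using made-up inputs rather than MNIST data. It checks nn.CrossEntropyLoss against a hand-written version and then performs a single SGD update on one toy parameter. When the logits are all equal, the loss is ln(10) ≈ 2.3026, which is close to the first training loss in the log above (2.296187), as one would expect from an untrained 10-class model.

```python
import math

import torch
import torch.nn as nn

# Four made-up samples whose logits are all zero, i.e. the model has no preference.
logits = torch.zeros(4, 10)
targets = torch.tensor([0, 3, 7, 9])

criterion = nn.CrossEntropyLoss()
loss = criterion(logits, targets)

# The same value computed by hand: mean of -log_softmax at the target class.
log_probs = torch.log_softmax(logits, dim=1)
manual = -log_probs.gather(1, targets.unsqueeze(1)).mean()

print(loss.item(), manual.item(), math.log(10))

# One plain SGD update on a single toy parameter: w <- w - lr * grad.
w = torch.tensor([1.0], requires_grad=True)
optimizer = torch.optim.SGD([w], lr=0.01)
(w ** 2).sum().backward()  # d(w^2)/dw = 2 at w = 1
optimizer.step()           # 1.0 - 0.01 * 2.0
print(w.item())
```

Because CrossEntropyLoss applies log-softmax internally, the network's last layer should output raw logits, which is what SimpleNet does.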

Training the model: the model is trained for several epochs (5 here), and the training loss is printed every 100 batches.
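The progress figures in the training log follow directly from the dataset size and batch size. Here is a small plain-Python sketch of that arithmetic; the variable names are illustrative, and it assumes the DataLoader keeps the final partial batch, which is its default behavior.

```python
import math

dataset_size = 60000  # MNIST training samples, as shown in the log
batch_size = 64       # same value as in the example
log_every = 100       # the loop prints when batch_idx % 100 == 0

# Number of batches per epoch, i.e. what len(train_loader) returns here.
num_batches = math.ceil(dataset_size / batch_size)
last_batch_size = dataset_size - (num_batches - 1) * batch_size

progress_lines = []
for batch_idx in range(0, num_batches, log_every):
    seen = batch_idx * batch_size
    percent = 100.0 * batch_idx / num_batches
    progress_lines.append(f"[{seen}/{dataset_size} ({percent:.0f}%)]")

print(num_batches, last_batch_size)
print(progress_lines)
```

Each epoch has 938 batches, the last of which holds only 32 images, so the final logged step in every epoch is batch 900, shown as 57600/60000 (96%).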

Testing the model: the model is evaluated on the test set, and the average loss and accuracy are printed.
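One caveat about the reported average loss: the example sums per-batch mean losses and then divides by the number of test samples, so the printed 0.0028 is smaller than the true per-sample loss by roughly a factor of the batch size. The sketch below shows one possible way to get a per-sample average, by summing losses with reduction="sum". It runs on synthetic data with a single linear layer standing in for the trained model; both are assumptions for illustration, and the evaluate helper is not part of the original code.

```python
import torch
import torch.nn.functional as F
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Synthetic stand-ins (assumptions for illustration): 100 random "images" and labels,
# and a single linear layer instead of the trained SimpleNet.
inputs = torch.randn(100, 784)
labels = torch.randint(0, 10, (100,))
loader = DataLoader(TensorDataset(inputs, labels), batch_size=64, shuffle=False)
model = torch.nn.Linear(784, 10)


def evaluate(model, loader):
    """Return (average loss per sample, accuracy) over every batch in loader."""
    model.eval()
    total_loss, correct, count = 0.0, 0, 0
    with torch.no_grad():
        for data, target in loader:
            output = model(data)
            # reduction="sum" adds up per-sample losses, so dividing by the
            # sample count afterwards gives a true per-sample average.
            total_loss += F.cross_entropy(output, target, reduction="sum").item()
            correct += (output.argmax(dim=1) == target).sum().item()
            count += target.size(0)
    return total_loss / count, correct / count


avg_loss, accuracy = evaluate(model, loader)
print(f"Average loss: {avg_loss:.4f}, Accuracy: {accuracy:.2%}")
```

Computed this way, the average does not depend on the batch size or on the last batch being smaller than the rest. The accuracy calculation is unaffected either way.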
